So your data science project isn’t working

We’ve all been there

Every data science project is a high-risk project at its core. Either you’re trying to predict something no one has predicted before (like when customers will churn), optimize something no one has optimized before (like which ads you will email to customers), or understand data that no one has looked at before (like figuring out why some group of customers is different). No matter what you’re doing, you’re the first person doing it and it’s always exploratory. Because data scientists are continuously doing new things, you will inevitably hit a point where you find out that what you hoped for just isn’t possible. We all have to grapple with our ideas not succeeding. It is heartbreaking and gut-wrenching, and you just want to stop thinking about data science and daydream about becoming a mixed-media artist in Costa Rica (combining metallurgy and glasswork). I’ve been in this field for over a decade and I still have those daydreams.

As an example, consider building a customer churn model. The likely course of events starts with a set of meetings where the data science team convinces executives it is a good idea. The team believes that by using information about customers and their transactions they can predict which customers will leave. The executives buy into the idea and green-light the project. Many other companies have these models and they seem straightforward, so it should work.

Unfortunately, once the team starts working on it, reality sets in. Maybe they find out that since the company recently switched systems, transaction data is only available for the past few months. Or maybe when the model is built, it has an accuracy equal to a coin flip. Problems like these build up and eventually the team abandons the project, dismayed.

This describes two-thirds of the projects I’ve worked on. Each time I feel awful about myself, with a lingering belief that the project would have worked if only I had been a better data scientist. After this long in the field I am now confident enough that this feeling bubbles up less, but it’s still there. It’s a very natural, but destructive, feeling to have. Despite having beaten myself up in these situations, these projects aren’t failing because of me as a data scientist. After experiencing this time and time again, I’ve come to the conclusion that there are three real reasons why data science projects fail:

The data isn’t what you wanted

You can’t look into every possible data source before pitching a project, so you have to make informed assumptions about what is available based on what you know of the company. Once the project starts, you often find out that many of your assumptions don’t hold. Either the data doesn’t actually exist, it isn’t stored in a useful format, or it’s not stored in a place you can access.

Since you need data before you can do anything, these are the first problems that arise. The first reaction to this is a natural internal bargaining where you try to engineer around the holes in your data. You say things like: “well maybe a year of data will be sufficient for the model” or “we can use names as a proxy for gender” and hope for the best.

When you pitch a project you can’t predict what the data will look like. Investigating data sources is a necessary part of any data science project. Being a better data scientist won’t help you predict how your company collects data. If the data isn’t there then you can’t science it.
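As a rough illustration of what that early investigation can look like, here is a minimal sketch that checks how many months of history a transaction table covers and how much of each field is missing. The records, the field names, and the 24-month requirement are all made up for the example; a real check would depend entirely on how your company stores its data.

```python
from datetime import date

# Hypothetical transaction records; every field name and value is an assumption.
transactions = [
    {"customer_id": 1, "date": date(2018, 8, 3), "amount": 20.0, "gender": None},
    {"customer_id": 2, "date": date(2018, 9, 15), "amount": 35.5, "gender": "F"},
    {"customer_id": 3, "date": date(2018, 10, 1), "amount": None, "gender": None},
    {"customer_id": 1, "date": date(2018, 11, 12), "amount": 12.0, "gender": None},
]

def audit(records, required_months=24):
    """Summarize date coverage and the share of missing values per field."""
    dates = [r["date"] for r in records]
    first, last = min(dates), max(dates)
    # Count calendar months touched by the data, inclusive of both ends.
    months = (last.year - first.year) * 12 + (last.month - first.month) + 1
    missing = {
        field: sum(r[field] is None for r in records) / len(records)
        for field in records[0]
    }
    return {
        "first": first,
        "last": last,
        "months": months,
        "enough_history": months >= required_months,
        "missing": missing,
    }

report = audit(transactions)
print(report)
```

A check like this won’t create data that doesn’t exist, but it does surface the gaps in the first week instead of the third month.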

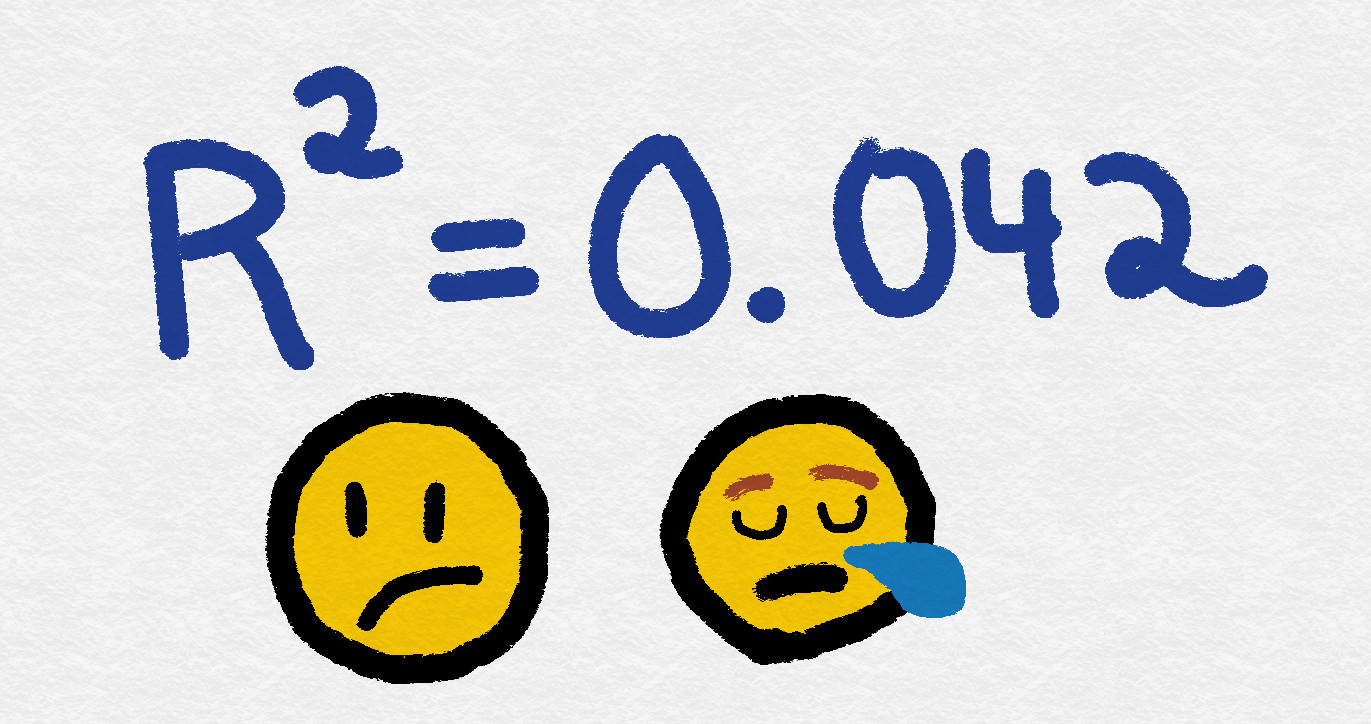

The model doesn’t work well

Once you get a good data set you extract features from it and put them into a model. But when you run it, the results aren’t promising. In our churn example, perhaps your model predicts everyone has the same probability of churning. You tell yourself it’s not working for one of two reasons:

- The data does not contain a signal. Maybe historic transactions don’t tell you which customers will churn. For instance, it would be ridiculous to try and predict the weather based on 10,000 die rolls. Sometimes the data doesn’t inform your prediction.

- A signal exists, but your model isn’t right. If only you had used a more powerful model or a more cutting-edge technique then at last your predictions would be sufficient.

The truth is it’s always, always the first situation, and never about you as a data scientist. If the signal exists, you’ll find it, no matter how mediocre your model. More advanced techniques and approaches may promote your model from good to great, but they won’t bring it from broken to passable. You have no control over the existence of signals so you want to blame yourself, but trust me, the missing signal is the problem. That sucks to figure out! And there is nothing you can do to fix it! Feel free to grieve here.
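To make that concrete, here is a minimal sketch on synthetic data (nothing here comes from a real churn project). It builds two made-up data sets with a single hypothetical feature: one where churn really depends on the feature, and one where churn is a coin flip. The “model” is deliberately crude, a nearest-centroid rule that compares a customer to the average churner and the average non-churner. All names, sizes, and noise levels are assumptions chosen for illustration.

```python
import random

def make_data(n, has_signal, rng):
    """Return (feature, label) pairs; labels depend on the feature only if has_signal."""
    rows = []
    for _ in range(n):
        x = rng.random()  # hypothetical feature, scaled to the range 0..1
        if has_signal:
            churned = (x + rng.gauss(0, 0.2)) > 0.5  # noisy but real relationship
        else:
            churned = rng.random() > 0.5  # coin flip, unrelated to x
        rows.append((x, int(churned)))
    return rows

def fit_centroids(train):
    """A deliberately mediocre model: the mean feature value for each class."""
    means = {}
    for label in (0, 1):
        xs = [x for x, y in train if y == label]
        means[label] = sum(xs) / len(xs)
    return means

def accuracy(means, test):
    """Predict the class whose mean is closest, then score against the truth."""
    correct = 0
    for x, y in test:
        pred = min(means, key=lambda label: abs(x - means[label]))
        correct += pred == y
    return correct / len(test)

def experiment(has_signal, seed=0):
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    data = make_data(4000, has_signal, rng)
    train, test = data[:3000], data[3000:]
    return accuracy(fit_centroids(train), test)

acc_signal = experiment(has_signal=True)
acc_noise = experiment(has_signal=False)
print(f"with signal: {acc_signal:.2f}, without signal: {acc_noise:.2f}")
```

With the signal present, even this crude rule lands well above chance; with coin-flip labels, it hovers around 50%, and no fancier model could do better because there is nothing in the feature to learn.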

You weren’t solving the right problem

Sometimes your data is good and your model is effective, but it doesn’t matter. You weren’t solving the right problem. Here is a story from what certainly was not my finest hour. I tried to adapt a crude calculation from Excel into a novel and more accurate machine learning approach. After I had the code working in R and had validated the results, I found out that the users were much happier with the Excel approach 😱. They didn’t understand my masterpiece! They valued the simplicity and ubiquity of Excel over actual accuracy. My new cool approach was worthless. I found out that if you aren’t delivering what the customer wants, your product doesn’t matter, no matter how cool.

They can’t all be winners.


It’s easy to not understand your customer’s needs. Pick your favorite large company: you can find a project they spent hundreds of millions of dollars on only to find out nobody wanted it. Flops happen and they are totally normal. Flops will happen to you and it’s okay! You can’t avoid them, so accept them and let them happen early and often. The more quickly you can pivot from a flop, the less of a problem it’ll be.

You can’t control what data is stored by your company. You can’t make the data contain the signals you want. You can’t know the best problem to solve before you try and solve it. None of these are related to your abilities as a data scientist. Feeling upset about these things is natural! For some reason people like to hype up data science as full of easy wins for companies, but in reality that isn’t the case. Over time you will grow as a data scientist. You will get better at understanding the potential risks, but you can never avoid them fully. Take care of yourself and remember that you’re doing the best with what you’ve got. If it becomes too much you can always be a mixed-media artist in Costa Rica.

[Update, November 12th, 2018]

Forced myself to write some positive notes on my "failed" data science project. I had so much trouble in the beginning with thinking of only 2, in the end I managed to write down 7 things we collectively learned from this project #successinfailure #datascience #rstats

— Dewi Koning (@DewiKoning) November 12, 2018

Dewi Koning made a great tweet about her own experience with “failed” data science projects. As she points out in her tweet, one of the best things you can do after your project didn’t work is catalog all of the things you’ve learned from it. Often over the course of a project you’ll learn about new data sets, learn why ideas aren’t practical, or learn more about the user’s true needs. These lessons are great for avoiding failed projects in the future, and often experts in the field get their knowledge from repeatedly failing until they know exactly what to avoid.

Want to go deeper? You can check out a 40-minute talk on projects failing that I gave at PyData Ann Arbor.

If you want a ton of ways to help grow a career in data science, check out the book Emily Robinson and I wrote: *Build a Career in Data Science*. We walk you through getting the skills you need to be a data scientist, finding your first job, then rising to senior levels.